Artificial Intelligence (AI) is a discipline within computer science focused on developing computer systems capable of simulating human intelligence. This might sound like something out of a science fiction novel, but AI is all around us, powering devices and technologies we use daily, from smartphones to digital assistants and self-driving cars.

The concept of AI isn’t new. The idea of creating machines capable of thinking like humans, a concept at the heart of AI research, has existed since ancient times. However, it wasn’t until the mid-20th century that the field took shape: John McCarthy coined the term “Artificial Intelligence” in his proposal for the 1956 Dartmouth Conference, the event at which the field was officially born. Since then, AI has evolved and expanded, moving from simple rule-based systems to complex systems that can perform tasks that normally require human intelligence.

In this article, we explore the types of AI, how it works, its applications, its advantages and disadvantages, and the ethical considerations surrounding its use. Whether you’re a seasoned tech enthusiast, a curious student, or simply someone trying to understand the AI buzzword, this guide is meant for you. Let’s begin.

Examples of AI

Artificial Intelligence is more prevalent in our daily lives than we often realize, functioning in areas that traditionally require human intelligence. Here are some examples of AI that many of us interact with regularly, demonstrating the widespread integration of AI and machine learning into daily life.

Generative AI Tools

Generative AI tools, such as ChatGPT, leverage machine learning techniques to generate a diverse array of content. These innovative tools provide a broad spectrum of creative opportunities, spanning from composing music to generating text for various purposes. For example, Jasper, an advanced AI writing assistant, stands out for its ability to create compelling and high-quality content tailored to different fields and industries.

Digital Assistants

Digital assistants such as Amazon’s Alexa, Google Assistant, and Apple’s Siri leverage artificial intelligence (AI) algorithms based on natural language processing (NLP) to comprehend and execute voice commands. Through sophisticated machine learning models, these assistants interpret queries, schedule reminders, curate music playlists, and precisely operate IoT-enabled smart home devices.

Self-Driving Cars

Self-driving vehicles, exemplified by innovations from Tesla and Waymo, use artificial intelligence for road navigation, traffic signal detection, and obstacle avoidance. Drawing on an array of sensors, cameras, and machine learning algorithms, these vehicles make rapid decisions while on the road.

Wearables

Wearable technologies, such as fitness trackers and smartwatches, use artificial intelligence to monitor health metrics, including heart rate, sleep quality, and physical activity. These devices offer tailored analyses and suggestions to improve user health and overall well-being.

Social Media Algorithms

Social media platforms use AI algorithms to curate and personalize content for their users based on their preferences and interactions. For example, Facebook for years used AI to identify faces in photos and suggest tagging friends and family members. Similarly, the recommendation algorithms at X (formerly Twitter) analyze user behavior to surface posts that align with each user’s interests and engagement patterns.

Chatbots

Chatbots on websites and customer service platforms utilize artificial intelligence (AI) for real-time comprehension and response to user inquiries. These AI-driven bots demonstrate multifunctionality, encompassing tasks like addressing frequently posed queries, scheduling appointments, and managing orders.

How Does AI Work?

Artificial Intelligence works by combining large amounts of data with algorithms that learn automatically from patterns and features in that data. AI is a broad discipline with many methods, but several key components drive its functioning.

Machine Learning

Machine Learning (ML) is a subset of Artificial Intelligence that allows systems to learn and improve from experience without being explicitly programmed. ML focuses on developing computer programs that can access data and use it to learn for themselves. Learning begins with observations or data, such as examples, direct experience, or instruction; the program looks for patterns in that data and uses them to make better decisions in the future.

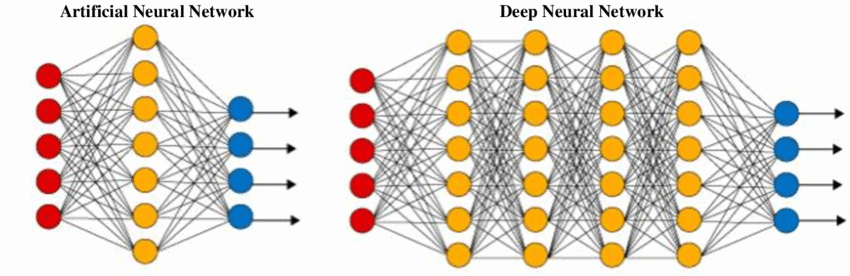
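
To make "learning from data" concrete, here is a minimal sketch in plain Python that fits a straight line to a handful of observations using ordinary least squares, then uses the fitted line to predict an unseen case. The data points and the function name `fit_line` are made up for illustration and do not come from any particular library.

```python
def fit_line(xs, ys):
    """Fit y = slope * x + intercept by ordinary least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # The covariance of x and y divided by the variance of x gives the slope.
    cov_xy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    slope = cov_xy / var_x
    intercept = mean_y - slope * mean_x
    return slope, intercept


# Hypothetical observations: hours studied vs. exam score.
hours = [1, 2, 3, 4, 5]
scores = [52, 54, 56, 58, 60]

slope, intercept = fit_line(hours, scores)

# The "learned" pattern is then applied to an input the program never saw.
predicted_score = slope * 6 + intercept
print(slope, intercept, predicted_score)
```

Nothing in the program states the relationship between hours and scores; it is recovered from the examples, which is the core idea behind machine learning.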

Artificial Neural Networks

Neural networks are a set of algorithms modeled loosely after the human brain, designed to recognize patterns. They interpret sensory data through machine perception, labeling, or clustering of raw input. Networks with many layers, the basis of deep learning, can identify complex patterns and classify data with error rates that match or beat human performance on some tasks. They are used to extract meaningful information from vast amounts of data.

At the core of neural networks are two kinds of parameters: weights and biases. Weights transform input data within the network’s layers and determine the connection strength between neurons. Biases are added to the weighted sum of a neuron’s inputs before it passes through the neuron’s activation function. A bias shifts that sum, so a neuron can activate even when all of its inputs are zero, which helps the network fit data that does not pass through the origin. Training a neural network means adjusting the weights and biases, based on output errors, to minimize the difference between the network’s output and the target.
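
The role of weights and biases can be sketched with a single artificial neuron in plain Python. This is an illustrative toy, not how production frameworks are written: the sigmoid activation, the squared-error loss, the learning rate, and the logical-OR training data are all assumptions chosen to keep the example small.

```python
import math


def sigmoid(z):
    """Squash any real number into the range (0, 1)."""
    return 1.0 / (1.0 + math.exp(-z))


def neuron(inputs, weights, bias):
    """Weighted sum of the inputs, plus the bias, passed through the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return sigmoid(z)


def train_step(inputs, target, weights, bias, learning_rate=0.5):
    """One gradient-descent update on the squared error 0.5 * (output - target)**2."""
    output = neuron(inputs, weights, bias)
    # Chain rule: d(error)/dz = (output - target) * sigmoid'(z),
    # where sigmoid'(z) = output * (1 - output).
    grad_z = (output - target) * output * (1.0 - output)
    new_weights = [w - learning_rate * grad_z * x for w, x in zip(weights, inputs)]
    new_bias = bias - learning_rate * grad_z
    return new_weights, new_bias


# With all-zero inputs the weights contribute nothing, so the bias alone
# decides the activation.
zero_input_output = neuron([0.0, 0.0], [0.3, -0.2], bias=1.0)

# Toy training data (an assumption for illustration): the logical OR function.
examples = [([0, 0], 0), ([0, 1], 1), ([1, 0], 1), ([1, 1], 1)]
weights, bias = [0.0, 0.0], 0.0
for _ in range(2000):
    for inputs, target in examples:
        weights, bias = train_step(inputs, target, weights, bias)

predictions = [round(neuron(inputs, weights, bias)) for inputs, _ in examples]
print(zero_input_output, predictions)
```

Each call to `train_step` nudges the weights and the bias in the direction that shrinks the gap between output and target, which is the tuning process described above on a very small scale.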

Deep Learning

Deep Learning is a subset of machine learning built on artificial neural networks with many layers, algorithms that draw inspiration from the brain’s structure and function. These networks loosely imitate the brain’s neural connections to process information. Deep learning models can be trained with supervised learning, where the training data is labeled, or with unsupervised learning, where the model identifies patterns in unlabeled data on its own. The ability of deep learning models to analyze vast amounts of data and extract features directly from it, eliminating the need for manual feature extraction, marks a significant advancement in the field of artificial intelligence.
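
The difference between supervised and unsupervised learning can be shown without any neural network at all. The sketch below uses two deliberately simple stand-in techniques, a nearest-centroid classifier (supervised) and a two-cluster k-means (unsupervised), on made-up one-dimensional data. The function names and the data are assumptions for illustration only.

```python
def centroids_from_labels(points, labels):
    """Supervised: use the provided labels to compute one mean per class."""
    sums, counts = {}, {}
    for point, label in zip(points, labels):
        sums[label] = sums.get(label, 0.0) + point
        counts[label] = counts.get(label, 0) + 1
    return {label: sums[label] / counts[label] for label in sums}


def classify(point, centroids):
    """Assign the label whose class mean is closest to the point."""
    return min(centroids, key=lambda label: abs(point - centroids[label]))


def two_means(points, iterations=10):
    """Unsupervised: split the points into two clusters using no labels."""
    centers = [min(points), max(points)]  # simple starting guess
    groups = [[], []]
    for _ in range(iterations):
        groups = [[], []]
        for point in points:
            nearest = 0 if abs(point - centers[0]) <= abs(point - centers[1]) else 1
            groups[nearest].append(point)
        # Move each center to the mean of its group (keep it if the group is empty).
        centers = [sum(g) / len(g) if g else c for g, c in zip(groups, centers)]
    return centers, groups


measurements = [1.0, 1.2, 0.8, 5.0, 5.2, 4.8]
labels = ["small", "small", "small", "large", "large", "large"]

# Supervised: the labels tell the model what the groups are called.
centroids = centroids_from_labels(measurements, labels)
label_for_new_point = classify(4.0, centroids)

# Unsupervised: the same structure is found, but the groups have no names.
centers, groups = two_means(measurements)
print(label_for_new_point, centers, groups)
```

Both approaches find the same two groups here; only the supervised one can attach a meaningful name to a new measurement, because only it was given names to begin with.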

Convolutional Neural Networks (CNNs) and Recurrent Neural Networks (RNNs) are two of the best-known Deep Learning architectures. CNNs excel at analyzing visual imagery, recognizing patterns at different levels of detail. They are well suited to image recognition and medical image analysis, and have also been applied to some NLP tasks. RNNs handle sequential data for tasks like speech recognition and translation. Their ‘memory’ carries information from earlier steps in a sequence forward, providing context for later steps. These architectures drive innovation in AI applications across various fields.
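
The two core operations behind these architectures can be sketched in a few lines of plain Python. The first function slides a small filter over an image, the basic step in a CNN layer; the second carries a hidden state from one sequence element to the next, the basic step in an RNN. The hand-picked kernel and the fixed weights `w_in` and `w_rec` are assumptions for illustration; a real network would learn these values during training.

```python
import math


def convolve2d(image, kernel):
    """Slide the kernel over the image with no padding (cross-correlation)."""
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    return [
        [
            sum(
                image[i + a][j + b] * kernel[a][b]
                for a in range(k_rows)
                for b in range(k_cols)
            )
            for j in range(out_cols)
        ]
        for i in range(out_rows)
    ]


def rnn_states(sequence, w_in=0.5, w_rec=0.5):
    """Each new state mixes the current input with the previous state."""
    state = 0.0
    states = []
    for x in sequence:
        state = math.tanh(w_in * x + w_rec * state)
        states.append(state)
    return states


# A tiny "image": dark on the left (0), bright on the right (1).
image = [[0, 0, 1, 1] for _ in range(4)]
# A filter that responds where brightness increases from left to right.
vertical_edge = [[-1, 1], [-1, 1]]
feature_map = convolve2d(image, vertical_edge)

# The final input is 1 in both sequences, but the earlier inputs differ,
# so the final states differ: that is the network's "memory".
after_zeros = rnn_states([0, 0, 1])[-1]
after_ones = rnn_states([1, 1, 1])[-1]
print(feature_map, after_zeros, after_ones)
```

The feature map is nonzero only in the column where the dark and bright halves meet, which is how a convolutional filter localizes a pattern.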

Natural Language Processing

Natural Language Processing (NLP) is a captivating branch of artificial intelligence (AI) that leverages AI models to enable computers to comprehend, interpret, and generate human language. By merging principles from various fields, such as computer science, linguistics, cognitive psychology, and information engineering, NLP serves as the bridge between human communication and machine understanding, revolutionizing how we interact with technology.
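
As a small taste of how text becomes something a program can compare, the sketch below uses a classic bag-of-words representation with cosine similarity. Modern assistants rely on far more capable learned language models; this simpler technique is swapped in only because it fits in a few lines. The example sentences and function names are assumptions for illustration.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def bag_of_words(text):
    """Represent a sentence as word counts, ignoring word order."""
    return Counter(tokenize(text))


def cosine_similarity(a, b):
    """Similarity between two word-count vectors, from 0 (no overlap) to 1."""
    dot = sum(a[word] * b[word] for word in set(a) & set(b))
    norm_a = math.sqrt(sum(count * count for count in a.values()))
    norm_b = math.sqrt(sum(count * count for count in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


command = bag_of_words("Turn on the kitchen lights")
rephrased = bag_of_words("Please turn the kitchen lights on")
unrelated = bag_of_words("What is the weather today")

similar_score = cosine_similarity(command, rephrased)
different_score = cosine_similarity(command, unrelated)
print(similar_score, different_score)
```

The rephrased command scores much closer to the original than the unrelated question does, even though the word order changed. Capturing meaning beyond shared words is where learned language models take over.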

Computer Vision

Computer Vision is a field of AI that aims to give machines a visual capability similar to, if not better than, human sight. It is concerned with the automatic extraction, analysis, and understanding of useful information from a single image or a sequence of images, and involves developing the theoretical and algorithmic basis for automatic visual understanding.

By combining these approaches, AI can transform industries and sectors by analyzing real-time data, optimizing logistics, predicting maintenance, and improving customer service. The potential for both AI and humans in this data-driven world is vast – but only if we understand how to harness it responsibly.

Types of Artificial Intelligence

Artificial Intelligence can be categorized into different types based on its capabilities and level of sophistication. Here are four main types of AI:

Reactive Machines

Reactive machines are the most basic type of AI systems. They are purely reactive and cannot form memories or use past experiences to inform current decisions. They react to a set of inputs with a set of programmed outputs. IBM’s chess-playing supercomputer, Deep Blue, which beat world champion Garry Kasparov in 1997, is a well-known early example.

Limited Memory

Limited memory AI refers to machines that, in addition to having the capabilities of reactive machines, can learn from historical data to make decisions. Most AI systems we interact with today fall into this category, including self-driving cars and recommendation algorithms that suggest what you might like based on your past behavior.
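
The contrast between a reactive machine and a limited memory system can be sketched in a few lines of Python. Both pieces are toy stand-ins: the rule table, the class name `LimitedMemoryRecommender`, and the genre data are hypothetical, and real systems of either kind are vastly more elaborate.

```python
from collections import Counter

# Fixed rules: the same input always produces the same output.
RULES = {"red": "stop", "yellow": "slow", "green": "go"}


def reactive_agent(signal):
    """Reactive: responds to the current input only and stores nothing."""
    return RULES[signal]


class LimitedMemoryRecommender:
    """Limited memory: keeps a record of past behavior and decides from it."""

    def __init__(self):
        self.history = Counter()

    def observe(self, genre):
        """Record one more item the user chose."""
        self.history[genre] += 1

    def recommend(self, default="popular"):
        """Suggest the genre chosen most often so far, or a default with no history."""
        if not self.history:
            return default
        return self.history.most_common(1)[0][0]


first_response = reactive_agent("red")
second_response = reactive_agent("red")  # identical: no past is consulted

recommender = LimitedMemoryRecommender()
before = recommender.recommend()
for genre in ["jazz", "rock", "jazz"]:
    recommender.observe(genre)
after = recommender.recommend()
print(first_response, second_response, before, after)
```

The reactive function gives the same answer no matter how often it is called, while the recommender’s answer changes as its history grows.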

Theory of Mind

Theory of mind AI is a more advanced type of AI that does not exist yet. These systems, envisaged in AI research, would understand that entities in the world can have thoughts and emotions that affect their behavior, and would be capable of understanding and interacting socially, just like humans.

Self-Awareness

Self-aware AI is the final stage of AI development and refers to AI systems that have their own consciousness and are self-aware. These machines would understand their own states, predict the feelings of others, and make abstractions and inferences. While this concept is largely theoretical at this point, it represents the ultimate goal of many AI researchers.

Strong AI vs Weak AI

In the realm of artificial intelligence, we often hear terms like Weak AI and Strong AI. These terms, coined by philosopher John Searle, help us understand the different levels of machine intelligence, contributing to the foundational knowledge in AI research.

Weak AI

Weak AI, also known as Narrow AI, refers to AI systems designed to perform a specific task, such as voice recognition, recommendation, or image recognition. These systems can be highly capable within their prescribed parameters. Still, unlike general AI, which aims for broader intellectual capabilities, Weak AI operates within predefined boundaries and cannot go beyond the tasks it is specifically designed for. Some of the most popular AI systems, such as Siri, Alexa, and Google Assistant, fall into this category.

Strong AI

Strong AI, also known as artificial general intelligence (AGI), is a kind of AI capable of understanding, learning, and applying knowledge, much like a human being. This type of AI could perform any intellectual task that a human can do: understanding, interpreting, and reasoning based on the data it encounters. However, as of now, strong AI is still a concept of science fiction, with no existing systems achieving this level of capability despite the progress in artificial intelligence technology.

While developing strong AI could revolutionize many aspects of our lives and society, it poses significant ethical and philosophical questions. As we continue to advance in the field of AI, these distinctions and the discussions around them will only become more critical.

What are the Advantages and Disadvantages of Artificial Intelligence?

Artificial intelligence has significant advantages and disadvantages. Understanding these is important for making informed decisions about implementing and using AI technology.

Advantages of AI

- Increased Efficiency and Throughput: AI can operate without rest and is immune to the physical limitations that humans can experience, increasing efficiency and throughput.

- Reduced Human Error: The phrase “human error” exists because humans make mistakes from time to time. A correctly programmed computer does not tire or lose focus, although an AI system can still make mistakes if its data or design is flawed.

- Takes Over Repetitive Tasks: Monotonous tasks can be handed to machines, which process information faster than humans and can handle many tasks at once.

- Taking on Risky Work: AI can be used in areas such as mining and space exploration, where human safety would otherwise be at risk.

- Data Analysis: AI can help to analyze data and predict future trends quickly.

Disadvantages of AI

- High Costs of Creation and Maintenance: AI comes with a high cost for creation and maintenance due to its complexity and the need for advanced hardware and software.

- Job Loss: With AI taking over a lot of manual work, there is a risk that a large number of jobs could be taken over by AI, leading to job displacement.

- Lack of Creative Thinking: AI systems struggle to think creatively or outside the box. Even generative tools work from patterns in their training data and within the limits of what they were designed to do.

- Dependency on Machines: As technology advances, humans have become heavily dependent on devices. If those systems break down, people can be severely handicapped by the loss of skills caused by overreliance on AI.

- Ethical Concerns: There are many ethical concerns surrounding AI, such as the potential for AI to be used in a harmful manner or for AI to make decisions that humans disagree with.

Balancing these advantages and disadvantages will be critical as we continue to see AI develop and become an even more prominent part of our daily lives.

AI Ethics, Regulations, and Governance

Artificial intelligence’s rapid advancement and increasing integration into various aspects of our daily lives necessitate ongoing discussions and considerations about AI ethics, regulations, and governance, especially in the context of technologies like ChatGPT. Ethical concerns include ensuring fairness, transparency, and accountability in AI systems to prevent the perpetuation or exacerbation of existing biases and infringements on individual rights or privacy. Furthermore, determining responsibility when an AI system’s decision leads to harm is a complex issue that requires careful consideration.

Regulations for AI, which may encompass data privacy, cybersecurity, and intellectual property rights, among others, aim to safeguard individuals and society while encouraging innovation and growth in AI. However, the fast pace of technological advancement and the global nature of AI development pose significant challenges in creating effective regulations. In 2022, the White House Office of Science and Technology Policy published the “Blueprint for an AI Bill of Rights,” a non-binding set of principles for the design and use of artificial intelligence.

Governance in AI involves the processes and structures that organizations use to oversee their AI use, including setting strategic direction, monitoring performance, managing risks, and ensuring compliance with ethical standards and regulations. Establishing robust governance frameworks can help organizations navigate the ethical and regulatory challenges associated with AI and ensure their AI initiatives align with their overall business goals and societal responsibilities.

In short, AI’s ethics, regulations, and governance are critical areas that require continued attention and thoughtful action as we navigate this transformative technology’s future.